In this post, we’re diving into a frequently asked question: what is low latency streaming, and why does it matter? When it comes to broadcasting live events, especially sports, latency is a fundamental concept to understand, as it can have a major impact not only on live production workflows but also on the final viewing experience. While video processing technology has advanced greatly since the beginning of digital television and OTT (over-the-top) video, we’re still fighting the battle against latency with solutions that promise low or ultra-low latency. Read on for a deep dive into the concept of latency: what causes it, why it matters, and how it can be minimized.

What is Low Latency Video Streaming?

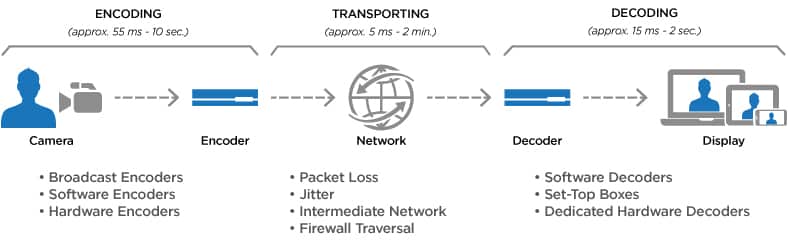

In video streaming, latency is the time it takes for live content to travel from its source to its destination. Broadcasters often refer to this as “glass-to-glass” or “end-to-end” latency: the total amount of time it takes for a single frame of video to travel from the camera to the final display. This delay can vary widely, typically from 15 to 30 seconds for online content and under 10 seconds for broadcast television. Low latency is not defined by an absolute value, but in the broadcast and streaming industry it’s often considered to be a few seconds or less. For live video contribution to production workflows, however, latency needs to be much lower, well under a second, before content is usable for live production, bi-directional interviews, and monitoring.

For a long time, studies suggested that humans needed to see an image for around 100ms to identify it correctly. However, more recent studies suggest that humans can process incoming visual stimuli in as little as 13ms. For the purposes of this post, we’re using the terms broadcast, low, and ultra-low latency to illustrate the differences for live video streaming.

Why Does Low Latency Streaming Matter?

While unreasonably high video latency is a serious annoyance to users, especially when watching live sports, ultra-low latency is not always critical; it simply depends on your application. For certain use cases, such as streaming previously recorded events, higher latency is perfectly acceptable, especially if it results in better picture quality through robust protection against packet loss. In linear broadcast workflows, the delay between the live event and the broadcast feed is typically somewhere around 10 seconds. The EBU, for example, defines “live” as seven seconds from glass to glass.

A short delay between broadcast production and playout may even be intentional, to facilitate live subtitling and closed captioning and to prevent obscenities from airing. For OTT video streaming workflows, latency typically ranges from 15 seconds to as much as a minute. However, for live broadcast production, especially given the increasingly decentralized workflows of talent and staff working remotely, latency needs to be kept as low as possible: half a second or less.

When is Low Latency Streaming Critical?

For live video production and streaming, keeping latency as low as possible is a must. Whether you’re producing live sporting events, esports, or interviews, nothing kills the viewing experience like high latency. We’ve all watched a live broadcast on location with long, awkward pauses, or people talking over each other in interviews because of latency issues. Or perhaps you’ve watched a hockey game online while your neighbor watches live over the air, and you hear them celebrate the winning shot 10 seconds before you see it. Or worse still, imagine watching election results and seeing them appear on your social media app before they reach your TV screen. In these cases, low latency ensures an optimal viewing experience with great interactivity and engagement.

The key to low latency video viewing, within seconds, is ultra-low latency video production, within milliseconds.

Use cases where low latency streaming is especially critical include:

- Live sporting events: Capturing all the camera angles from a remote venue and including commentators and interviews requires close coordination of ultra-low latency video streams.

- Esports and gaming: Ultra-low latency video is critical when covering events where every millisecond matters with players dispersed across the globe.

- Bi-directional interviews: To keep conversations fluid and natural, video streams need to be kept at very low latency for both the interviewer and interviewee so that the total return time is under half a second.

- Real-time monitoring: With decentralized broadcast production increasingly the norm, remote production staff, including assistant directors, rely on ultra-low latency video to make quick adjustments seconds before content goes to air.

- Security and surveillance: For ISR and public safety applications, low latency video along with metadata is critical for making split-second decisions.

- Remote operations: For video equipment operators, such as replay and graphics operators, to be able to work remotely, video streams over IP must arrive as close as possible in time to what the equipment monitors show onsite.

- Decentralized workflows and remote collaboration: With talent and staff dispersed across geographies, access to ultra-low latency video production and monitoring feeds is needed for seamless real-time collaboration.

What Causes Video Latency?

Video latency isn’t caused only by how long a signal takes to travel from A to B, but also by the time it takes to process video from raw camera input into encoded and then decoded video streams. Many factors can contribute to latency depending on your delivery chain and the number of video processing steps involved. While individually these delays might be minimal, cumulatively they can quickly add up.
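To make the "adds up" point concrete, here is a minimal sketch of a glass-to-glass latency budget. The stage names and millisecond values are hypothetical, chosen only for illustration, and are not measurements of any real product or workflow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One processing step in the delivery chain and the delay it adds."""
    name: str
    delay_ms: float


def glass_to_glass_ms(stages):
    """Total end-to-end latency is the sum of every stage's delay."""
    return sum(stage.delay_ms for stage in stages)


def running_total(stages):
    """Return (stage name, cumulative delay in ms) after each stage."""
    total = 0.0
    steps = []
    for stage in stages:
        total += stage.delay_ms
        steps.append((stage.name, total))
    return steps


# Hypothetical values for illustration only.
PIPELINE = [
    Stage("camera capture", 17.0),
    Stage("encode", 50.0),
    Stage("network transit", 40.0),
    Stage("error-correction buffer", 120.0),
    Stage("decode", 50.0),
    Stage("display", 17.0),
]

for name, cumulative in running_total(PIPELINE):
    print(f"{name:<24} {cumulative:6.1f} ms")
```

With these assumed numbers, no single stage exceeds 120 ms, yet the chain as a whole lands at 294 ms. Swapping in measured values for your own workflow shows which stage is worth optimizing first.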

Some of the key contributors to video latency include:

- Network type and speed: Whether it be public internet, satellite, MPLS, fiber, or cellular, the type of network you use to transmit your video will impact both latency and quality. The speed of a network is determined by its throughput, meaning how many megabits or gigabits it can carry per second, as well as by the distance traveled. On an IP network, the total round-trip time (RTT) can be determined at any given moment by pinging an IP address and measuring how long it takes for a packet to travel to that destination and back.

- Individual components in the streaming workflow: From the camera to video encoders, bonded cellular transmitters, mobile apps, video decoders, production switches, and then to the final display, each of the individual components in a streaming workflow creates processing delays that contribute to latency in varying degrees. The latency of video streaming services, for example, is usually much higher than that of digital TV because video needs to go through additional steps, such as ABR (adaptive bitrate) transcoding, before being viewed on a device.

- Streaming protocols and output formats: The choice of video protocol used for broadcast production contribution, and of the delivery formats used for viewing devices, also affects video latency. Not all protocols are equal: the type of error correction a protocol uses to counter packet loss and jitter can add to latency, as can firewall traversal.
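The round-trip time mentioned in the list above is usually measured with an ICMP ping, which typically needs elevated privileges when done from code. As a rough stand-in, the sketch below times a TCP handshake instead, which takes approximately one round trip. The function names are our own, and the result is an approximation rather than a true ping: it includes connection setup overhead and, for hostnames, DNS lookup time.

```python
import socket
import time


def tcp_connect_rtt_ms(host, port, timeout_s=2.0):
    """Time one TCP handshake to host:port, in milliseconds.

    A TCP connect completes after roughly one round trip, so this
    approximates RTT without needing raw-socket (ICMP) privileges.
    """
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout_s):
        elapsed_s = time.perf_counter() - start
    return elapsed_s * 1000.0


def median_rtt_ms(host, port, samples=5):
    """Take several samples and return the median to smooth out jitter."""
    results = sorted(tcp_connect_rtt_ms(host, port) for _ in range(samples))
    return results[len(results) // 2]
```

Calling `median_rtt_ms` with the address and an open TCP port of your receiving site gives a figure you can feed into protocol settings that scale with RTT. Because network conditions change, it is worth repeating the measurement over time rather than relying on a single reading.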

How Can Latency be Reduced?

There are several ways to minimize video latency without having to compromise on picture quality. The first is to choose a hardware encoder and decoder combination engineered to keep latency as low as possible, even when using a standard internet connection. The latest generation of video encoders and video decoders can maintain low latency (under 50ms in some cases) and have enough processing power to use HEVC to compress video to extremely low bitrates (down to under 3 Mbps) all while maintaining high picture quality.

Latency should also be considered when contributing live video using a bonded cellular transmitter. Some field units, including the Haivision Pro 460 mobile video transmitter, now feature an ultra-low latency mode when transmitting over 5G networks, bringing latency down to as low as 70ms from camera to production.

Another key factor in achieving lower levels of latency is to select a video transport protocol that will deliver high-quality video at low latency over noisy, public networks like the internet. Successfully streaming video over the internet without compromising picture quality requires some form of error correction as part of the streaming protocol to recover from packet loss. All types of error correction introduce latency, but some more than others. The Secure Reliable Transport (SRT) open-source protocol uses ARQ (automatic repeat request) error correction to recover lost packets while introducing less latency than other approaches, such as FEC (forward error correction) or TCP-based protocols like RTMP, though SRT can also support FEC when needed. With over 500 leading broadcast technology providers now supporting the SRT protocol, it has become the industry standard for low latency streaming.
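To see why different error correction schemes cost different amounts of latency, here is a deliberately simplified model, not SRT's actual implementation. It assumes that retransmission-based recovery (ARQ) needs a receive buffer sized as a multiple of the round-trip time, and that block-based FEC must wait for a full block of packets before it can repair a loss. The RTT multiplier of 4, the 1316-byte packet payload (seven 188-byte MPEG-TS packets), and the 10 x 10 block size are assumptions for illustration.

```python
def arq_buffer_ms(rtt_ms, rtt_multiplier=4.0):
    """Receive buffer needed to allow time for retransmission requests.

    Each retransmission costs about one round trip, so the buffer is
    modeled as a multiple of RTT. The default multiplier is an assumption.
    """
    return rtt_ms * rtt_multiplier


def fec_block_delay_ms(columns, rows, bitrate_mbps, packet_bytes=1316):
    """Time to receive one full FEC block at a constant bitrate.

    Simplification: the receiver waits for columns * rows packets
    before it can rebuild a lost packet.
    """
    packets_per_block = columns * rows
    packet_interval_ms = packet_bytes * 8 / (bitrate_mbps * 1_000_000) * 1000
    return packets_per_block * packet_interval_ms


# Illustrative inputs: 20 ms RTT, 6 Mbps stream, 10 x 10 FEC block.
print(f"ARQ buffer: {arq_buffer_ms(20):.1f} ms")
print(f"FEC block:  {fec_block_delay_ms(10, 10, 6):.1f} ms")
```

With these assumed inputs, the ARQ buffer comes to 80 ms and the FEC block delay to roughly 175 ms. The comparison shifts with RTT, bitrate, and block size, so treat the model as a way to reason about the trade-off rather than as a prediction for a specific link.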

When looking at minimizing latency, it’s important to carefully consider the impact of the configuration of the different components depending on the use case. There are always trade-offs to be made.

Achieving higher quality for the end user usually means higher resolutions and frame rates, and therefore higher bandwidth requirements. While new technology and advanced codecs strive to reduce latency, finding the right balance will always be important.
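A quick back-of-the-envelope calculation shows how fast bandwidth grows with resolution and frame rate. The sketch below assumes 8-bit 4:2:0 video, which averages 12 bits per pixel before compression; the function names are ours, and the 3 Mbps target is simply an example bitrate.

```python
def raw_bitrate_mbps(width, height, fps, bits_per_pixel=12.0):
    """Uncompressed video bitrate in megabits per second.

    12 bits per pixel corresponds to 8-bit 4:2:0 sampling
    (8 bits of luma plus, on average, 4 bits of chroma per pixel).
    """
    return width * height * bits_per_pixel * fps / 1_000_000


def compression_ratio(width, height, fps, target_mbps, bits_per_pixel=12.0):
    """How many times smaller the encoded stream must be than the raw video."""
    return raw_bitrate_mbps(width, height, fps, bits_per_pixel) / target_mbps


for label, w, h in [("1080p60", 1920, 1080), ("2160p60", 3840, 2160)]:
    raw = raw_bitrate_mbps(w, h, 60)
    ratio = compression_ratio(w, h, 60, target_mbps=3.0)
    print(f"{label}: raw {raw:.0f} Mbps, needs about {ratio:.0f}:1 to fit 3 Mbps")
```

Under these assumptions, 1080p60 is about 1,493 Mbps uncompressed and 4K UHD at 60 frames per second is four times that, which is why codec efficiency, picture quality, and latency have to be balanced against each other.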

Ultimately, the targeted use case will determine the best balance within the triangle of latency, bandwidth, and picture quality. For applications where low latency streaming is critical, such as video surveillance and ISR, picture quality can often be traded in favor of minimal latency. However, for use cases where pristine broadcast-quality video matters, latency can be increased slightly in order to support advanced video processing and error correction.

The SRT streaming protocol, unlike RTMP, is codec agnostic and can support HEVC video for high-quality content, including 4K UHD video, at low bitrates and low latency. When a workflow delivers the optimal combination of bandwidth efficiency, high picture quality, and low latency, viewers can enjoy a great live experience over any network, with no spoilers.

Low Latency Streaming Solutions from Haivision

Haivision’s powerful Makito X4 video encoder and decoder pair offers broadcast-quality streaming at ultra-low latency. Fueled by the SRT low latency protocol, the pair is relied upon by organizations worldwide for its ultra-low latency, pristine quality, and rock-solid reliability when streaming live content over IP networks, including the internet. In addition, by taking advantage of slice-based HEVC and H.264 video encoding techniques, the Makito X4 video encoder and decoder can reduce overall end-to-end latency by a further 25% to well below 100ms.