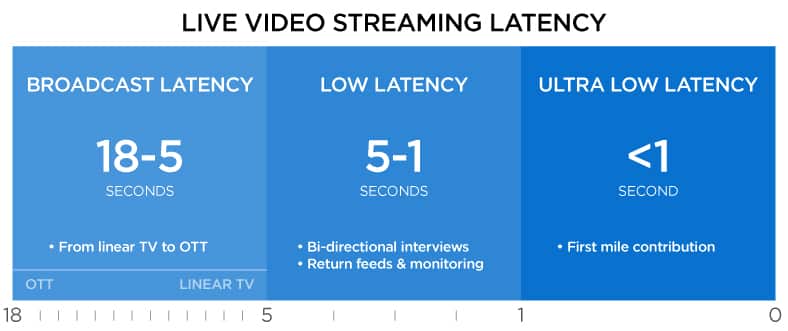

Broadband internet combined with highly efficient video compression, particularly HEVC, has brought the picture quality of OTT video on par with broadcast television. In some cases, such as 4K UHD, OTT services are even ahead of the game. However, there is one area where traditional broadcast television maintains an edge over OTT: latency, the time it takes for an image to be captured and delivered to a screen.

Broadcast television latency is typically 5 to 7 seconds, which includes an intentional delay that keeps profanity and live production errors from making it to air. In comparison, OTT services can take up to 45 seconds before a viewer sees live content. Following an event the better part of a minute after it has happened is an ongoing frustration for viewers.

Reducing OTT Latency

For true sports fans, traditional television remains the best way to keep up with the action as it happens. By bringing OTT latency down to linear television levels, live OTT services, the live-event counterparts of Netflix, can finally compete with traditional broadcasters.

Meanwhile, broadcasters can compete with OTT by offering second-screen video applications. Using a mobile app or a hybrid OTT set-top box, linear television broadcasters can provide access to different viewpoints for a more personalized viewing experience. This only works when OTT latency is on par with broadcast latency; otherwise the second-screen feed lags behind the main broadcast.

DVB-I

In Europe, the DVB-I initiative aims to deliver a common viewing experience across all devices by bringing OTT latency down to broadcast television levels. DVB-I builds on DVB-DASH, which is itself based on MPEG-DASH, an industry-standard protocol for Adaptive Bitrate (ABR) streaming of OTT content over HTTP, the data transfer protocol that underpins the World Wide Web. MPEG-DASH is widely used for streaming to Google Android devices, including smart TVs and mobile phones.

Adaptive Bitrate Streaming

The other major ABR protocol is HLS, developed by Apple for iOS devices. ABR works by dividing video streams into segments, usually 2 to 10 seconds long. Each segment contains a series of video frames encoded in H.264 or HEVC as one or more Groups of Pictures (GOPs). Several versions of each segment, encoded at different quality levels, are stored on an origin server and delivered through a Content Delivery Network (CDN). For every segment (every 10 seconds with 10-second segments), the viewing device fetches the highest-quality version that its network connection to the server can handle at that moment.
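The per-segment choice described above can be sketched as a simple throughput rule: pick the highest bitrate that fits within a safety margin of the measured bandwidth. This is a minimal sketch under stated assumptions; the bitrate ladder, the 0.8 safety factor, and the names `Rendition` and `pick_rendition` are hypothetical, and production players combine throughput estimates with buffer level and other heuristics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int  # encoded bitrate of this version of each segment


def pick_rendition(renditions, measured_kbps, safety=0.8):
    """Return the highest-bitrate rendition that fits within a fraction of the
    measured throughput; fall back to the lowest rendition if none fit."""
    budget = measured_kbps * safety  # hypothetical headroom for throughput swings
    affordable = [r for r in renditions if r.bitrate_kbps <= budget]
    if not affordable:
        return min(renditions, key=lambda r: r.bitrate_kbps)
    return max(affordable, key=lambda r: r.bitrate_kbps)


# Hypothetical bitrate ladder stored on the origin server
ladder = [
    Rendition("1080p", 6000),
    Rendition("720p", 3000),
    Rendition("480p", 1200),
    Rendition("240p", 400),
]

# Simulated throughput measured before each segment request (kbps)
for throughput in [8000, 4000, 1000, 300]:
    print(throughput, "->", pick_rendition(ladder, throughput).name)
```

Because the decision is made once per segment, longer segments mean the player reacts more slowly to changing network conditions.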

Although this approach works well for VOD content, it introduces significant latency for live video because players typically buffer at least three segments before playback. With 10-second segments, for example, buffering alone can add 30 seconds of latency.
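The buffering arithmetic is simply segment duration multiplied by the number of buffered segments. A minimal sketch, assuming the three-segment buffer from the text and ignoring encoding, packaging, and CDN delays; `buffering_latency` is a hypothetical helper name.

```python
def buffering_latency(segment_seconds: float, segments_buffered: int = 3) -> float:
    """Latency added by the player buffer alone: playback sits this many whole
    segments behind the live edge, because each segment must be complete and
    downloaded before it can be played."""
    return segment_seconds * segments_buffered


for seg in (2, 6, 10):
    print(f"{seg:>2} s segments -> {buffering_latency(seg):.0f} s of buffer latency")
```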

CMAF

In 2016, Apple announced that it would support fragmented MP4 (fMP4) as a container for HLS streaming, paving the way for a new MPEG standard backed by both Apple and Microsoft: the Common Media Application Format (CMAF). Now that HLS and MPEG-DASH can share a common container, the focus has shifted to CMAF’s ability to divide ABR segments into even smaller parts, or chunks.
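To illustrate what “fragmented” means: an fMP4/CMAF stream is a sequence of ISO Base Media File Format boxes, each starting with a 32-bit big-endian size and a four-character type. Initialization data (`ftyp`, `moov`) comes first, and media follows as repeated `moof`/`mdat` pairs; a CMAF chunk is a single `moof`/`mdat` pair. The sketch below builds a toy stream with placeholder payloads (not valid box contents) and lists its top-level boxes; `make_box` and `iter_boxes` are hypothetical helper names.

```python
import struct


def make_box(box_type: bytes, payload: bytes) -> bytes:
    """Build a minimal box: 32-bit big-endian size, 4-char type, then payload."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


def iter_boxes(data: bytes):
    """Yield (type, payload_length) for each top-level box in `data`."""
    pos = 0
    while pos + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, pos)
        header = 8
        if size == 1:  # a 64-bit "largesize" follows the type field
            (size,) = struct.unpack_from(">Q", data, pos + 8)
            header = 16
        elif size == 0:  # the box extends to the end of the data
            size = len(data) - pos
        if size < header:
            raise ValueError(f"malformed box at offset {pos}")
        yield box_type.decode("ascii"), size - header
        pos += size


# Toy stream: init boxes, then three chunks (moof + mdat), with placeholder bytes
init = make_box(b"ftyp", b"\x00" * 8) + make_box(b"moov", b"\x00" * 16)
chunks = b"".join(
    make_box(b"moof", b"\x00" * 8) + make_box(b"mdat", b"frame-data-%d" % i)
    for i in range(3)
)
stream = init + chunks
print([box_type for box_type, _ in iter_boxes(stream)])
```

Because each `moof`/`mdat` pair is self-describing, a packager can emit it as soon as its frames are encoded instead of waiting for the whole segment.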

With CMAF’s low latency mode, devices no longer have to wait for an entire OTT segment to arrive. Using HTTP/1.1 chunked transfer encoding, the player can start playing the first chunk of a segment right away, which significantly reduces latency. For example, if a 10-second segment is cut into 10 one-second chunks, total origin-to-player latency including buffering can be reduced from 30 to 3 seconds.
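The 30-to-3-second figure follows if the player keeps the same three-unit buffer but counts chunks instead of whole segments. The sketch assumes exactly that (a simplification, since real players tune their buffer targets independently) and ignores encoding, packaging, and CDN delays; `chunked_latency` is a hypothetical name.

```python
def chunked_latency(segment_seconds: float, chunks_per_segment: int,
                    units_buffered: int = 3) -> float:
    """Buffer latency when the player holds `units_buffered` chunks
    rather than `units_buffered` whole segments."""
    if chunks_per_segment < 1:
        raise ValueError("chunks_per_segment must be at least 1")
    chunk_seconds = segment_seconds / chunks_per_segment
    return chunk_seconds * units_buffered


# chunks_per_segment=1 is the classic, unchunked case
for chunks in (1, 2, 5, 10):
    print(f"10 s segment in {chunks:>2} chunk(s): {chunked_latency(10, chunks):.1f} s")
```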

Low Latency with HLS

Furthering the cause of low latency streaming is an open source community initiative called LHLS (low latency HLS), which uses Media Source Extensions (MSE) and the hls.js JavaScript library to stream chunked video over HTTP/1.1 with standard servers and browser clients.
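Chunked transfer encoding is what lets a server start sending a segment before it is complete. A minimal sketch of the HTTP/1.1 framing: each chunk is its length in hexadecimal, CRLF, the bytes, CRLF, and a zero-length chunk ends the body. The payloads are placeholders standing in for CMAF chunks, and the function names are hypothetical; in practice HTTP chunk boundaries need not line up with CMAF chunks, and HTTP libraries and browsers handle this framing transparently.

```python
def encode_chunked(pieces):
    """Frame byte strings as an HTTP/1.1 chunked body: hex size, CRLF,
    data, CRLF per chunk, terminated by a zero-size chunk."""
    out = bytearray()
    for piece in pieces:
        if not piece:
            continue  # a zero-length chunk would end the body early
        out += f"{len(piece):X}\r\n".encode("ascii") + piece + b"\r\n"
    out += b"0\r\n\r\n"
    return bytes(out)


def decode_chunked(body):
    """Yield each chunk's payload as soon as it is parsed, the way a player
    can hand data to the decoder before the full segment has arrived."""
    pos = 0
    while True:
        line_end = body.index(b"\r\n", pos)
        size = int(body[pos:line_end].split(b";")[0], 16)  # ignore chunk extensions
        pos = line_end + 2
        if size == 0:
            return  # last chunk; any trailer fields are ignored here
        yield body[pos:pos + size]
        pos += size + 2  # skip the data and its trailing CRLF


# Placeholder payloads standing in for CMAF chunks
wire = encode_chunked([b"chunk-1", b"chunk-2", b"chunk-3"])
print(wire)
print(list(decode_chunked(wire)))
```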

At its Worldwide Developers Conference (WWDC) in June 2019, Apple, a company with a habit of creating its own standards, announced Low Latency HLS as a way to drastically cut last mile latency. Low Latency HLS relies on HTTP/2’s ability to push chunks, or “parts” as Apple calls them, to the player, saving time by reducing the number of round-trip requests between players and servers.

First Mile vs. Last Mile

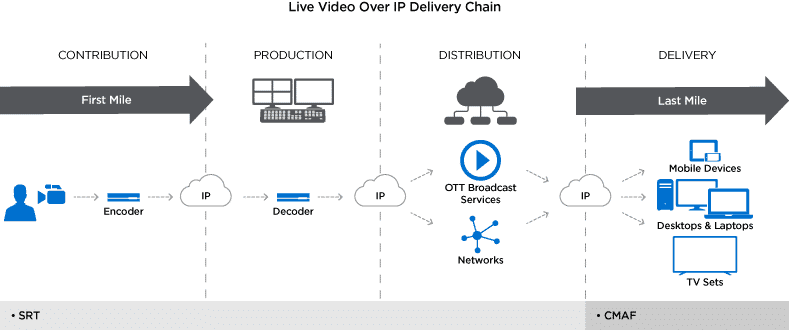

Although the battle for low latency video streaming is heating up at the last mile, where content is delivered to screens for viewing, the low latency win begins much earlier, at the first mile. When covering remote events, the first mile consists of capturing content from a camera, encoding it in H.264 or HEVC, and streaming it over an IP network to a production facility. This stage, also called broadcast contribution, is critical to managing overall end-to-end latency.

SRT

HLS, MPEG-DASH, and CMAF are not suited to first mile video streaming because they introduce too much latency for live broadcast production. Instead, broadcasters are turning to SRT (Secure Reliable Transport), an open source streaming protocol pioneered by Haivision. SRT includes several features critical for first mile contribution, including low latency packet-loss recovery and content encryption.
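Low latency packet-loss recovery means lost packets are retransmitted only while they can still arrive before their playout deadline. The sketch below uses a simplified retransmission (ARQ) model, which is an assumption rather than SRT’s exact algorithm: each recovery attempt (receiver loss report plus sender retransmission) costs one round trip, so the number of attempts that fit is the latency budget divided by the RTT. The link RTTs, the 120 ms budget, and the function name are hypothetical.

```python
import math


def retransmission_attempts(latency_ms: float, rtt_ms: float) -> int:
    """Recovery round trips that fit inside the receiver's latency budget.

    Simplified model: each attempt costs one RTT, and a packet that cannot
    arrive before its playout deadline is treated as lost for good.
    """
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    return math.floor(latency_ms / rtt_ms)


# Hypothetical links: (description, round-trip time in ms)
links = [("metro fiber", 10), ("long-haul", 60), ("intercontinental", 150)]
latency_budget_ms = 120  # hypothetical receiver latency setting

for name, rtt in links:
    attempts = retransmission_attempts(latency_budget_ms, rtt)
    status = "recoverable" if attempts >= 1 else "loss cannot be repaired"
    print(f"{name:>16}: RTT {rtt:>3} ms -> {attempts} attempt(s), {status}")
```

In this model, raising the latency setting relative to the RTT buys more recovery attempts at the cost of delay, which is why SRT latency is typically configured as a multiple of the measured round-trip time.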

Just How Low Can You Go?

Once live content is produced, the next phase is to distribute it to broadcast affiliates, rights holders, and OTT services. After the streams reach an OTT transcoder and origin server, they can rely on chunked transfer encoding and CMAF for delivery to viewers’ devices. Red Bee Media demonstrated the effectiveness of combining SRT and CMAF, achieving glass-to-glass latency of under 3.5 seconds.

At this year’s NAB Show in Las Vegas, Haivision and Epic Labs demonstrated an SRT to CMAF live broadcast solution based on Haivision Hub and Microsoft Azure. An SDI video source from a camera was fed into a Haivision Makito X video encoder, which compressed the content using HEVC and streamed it from Las Vegas to Chicago using Haivision Hub on top of the Microsoft Azure fiber backbone. Epic Labs’ LightFlow hublet, within Haivision Hub, transcoded the SRT stream to CMAF and MPEG-DASH before sending it to a CDN for delivery to end devices. The overall end-to-end latency of this SRT to CMAF solution was clocked at 3 seconds.

To conclude, SRT combined with a low latency video encoder such as the Makito X4 can keep latency as low as 55 ms, under two frames at 30 fps. By keeping latency as low as possible during the contribution, production, and distribution stages of live video broadcasting, latency buildup is reduced, and last mile low latency techniques such as CMAF can be more effective in bringing OTT latency down on par with linear broadcast television.
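Whether 55 ms counts as “under two frames” depends on the frame rate. A quick conversion, using common broadcast frame rates chosen as examples and a hypothetical helper name, shows the figure holds at 25 or 30 fps but not at 50 or 60 fps, where 55 ms is closer to three frames.

```python
def latency_in_frames(latency_ms: float, fps: float) -> float:
    """Express a latency in frame durations at the given frame rate."""
    frame_ms = 1000.0 / fps  # duration of one frame in milliseconds
    return latency_ms / frame_ms


for fps in (25, 30, 50, 59.94):
    print(f"{fps:>6} fps: 55 ms = {latency_in_frames(55, fps):.2f} frames")
```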

To learn more about how SRT is fueling low-latency video transport over the internet, read our white paper, SRT Open Source Streaming for Low Latency Video.