Television broadcasting continues to evolve. Demand for live event coverage, especially news and sports, is greater than ever. This presents a challenge for broadcast engineers, who are tasked with delivering more live content, and more varied live content, without sacrificing the quality their audiences expect.

The demand for content also goes beyond linear television channels: there is always content people want to see that never makes it into a scheduled program. This content is often pushed online through live OTT services, but broadcasters can find it difficult to justify the cost of producing it, because online viewers expect broadcast quality. Make no mistake: they want it live, and they want dynamic coverage with multiple angles.

The Challenges of Remote Production

Using multiple camera angles in a live broadcast poses several challenges. Your transitions need to be smooth: if the angles are not synchronized, viewers will notice the action skip or repeat when you cut. Your audio also needs to stay in sync with every camera angle.

Humans are quite sensitive to whether sound and picture line up, for example whether the audio matches the movement of a speaker's lips. The average viewer will notice if the audio runs even one frame ahead of the video, or two frames behind it. That leaves you roughly one frame of leeway, so your feeds must be tightly synchronized.
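As a rough illustration, the rule of thumb above can be turned into a simple check on a measured audio/video offset. This is a minimal Python sketch, not a formal compliance test: the function names and the sign convention (positive means audio leads the picture) are assumptions for illustration, and the thresholds are simply the one-frame-ahead and two-frames-behind figures quoted above.

```python
def frame_duration_ms(fps: float) -> float:
    """Duration of one video frame in milliseconds."""
    return 1000.0 / fps


def lip_sync_noticeable(audio_offset_ms: float, fps: float) -> bool:
    """Return True if the offset breaks the one-ahead / two-behind rule of thumb.

    Sign convention (an assumption for this sketch):
      audio_offset_ms > 0  -> audio is heard before the matching picture
      audio_offset_ms < 0  -> audio is heard after the matching picture
    """
    frame = frame_duration_ms(fps)
    audio_leads_too_much = audio_offset_ms >= frame       # one frame ahead
    audio_lags_too_much = audio_offset_ms <= -2 * frame   # two frames behind
    return audio_leads_too_much or audio_lags_too_much


if __name__ == "__main__":
    # At 25 fps one frame lasts 40 ms.
    for offset in (40, 30, -60, -80):
        print(offset, lip_sync_noticeable(offset, fps=25))
```

At 25 fps, audio that is 40 ms early is flagged, while audio that is 60 ms late still falls inside the two-frame allowance.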

When you produce live content from remote locations, the workflow becomes more complex and costly. Outside broadcast (OB) vans are not always readily available, sending feeds over the internet can be unreliable, satellite feeds are expensive, and private managed networks require significant investments of time and money to set up.

Multi-Camera Synchronization

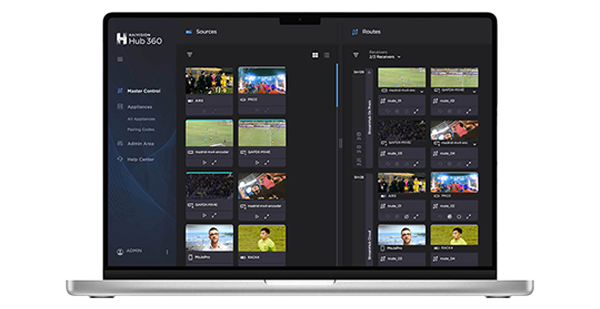

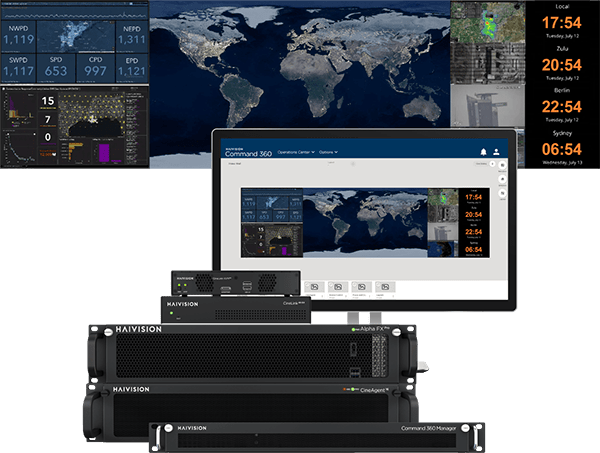

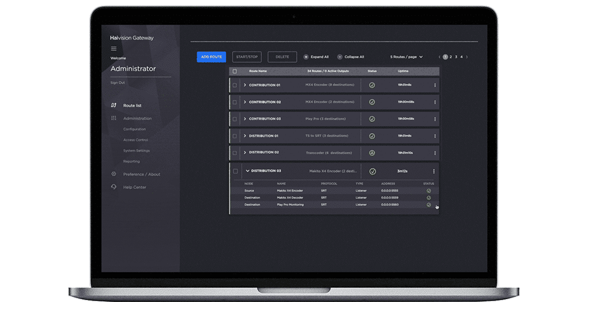

Fortunately, new technology is making remote production over IP networks, including the public internet, much easier. With multi-camera synchronization, NTP-synchronized encoders connected to genlocked cameras add a timestamp to each audio and video feed. The feeds are then sent over the internet to decoders at your main broadcast center or to a cloud-based live production workflow. Each decoder, whether on-premises or in the cloud, compares the timestamps on each feed against the clock of the NTP server it is linked to. This lets every decoder align its output to the same NTP time reference, so all contribution feeds stay in sync regardless of the delay between individual encoders and decoders. With the live feeds decoded and synchronized, engineers can switch between cameras without worrying about video glitches or loss of lip-sync.
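To make the decoder-side step more concrete, here is a minimal Python (3.9+) sketch of one common way timestamp alignment can work. It assumes that every frame carries a capture timestamp from the encoder's NTP-synchronized clock, that the decoder's clock follows the same NTP reference, and that the decoder delays every feed by one fixed playout latency chosen to cover the slowest path. The `Frame` type and the `common_latency` and `playout_schedule` functions are hypothetical names for illustration, not any vendor's implementation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    camera: str
    capture_ts: float  # seconds, stamped by the encoder from its NTP-synced clock
    arrival_ts: float  # seconds, decoder's NTP-synced clock when the frame arrived


def common_latency(frames: list[Frame], margin: float = 0.1) -> float:
    """One playout delay for all feeds: the slowest observed transit time plus a margin."""
    return max(f.arrival_ts - f.capture_ts for f in frames) + margin


def playout_schedule(frames: list[Frame], latency: float) -> dict[float, dict[str, Frame]]:
    """Group frames into playout slots keyed by capture time + common latency.

    Frames captured at the same instant share a slot regardless of their
    individual network delay, so a switcher can cut between them cleanly.
    Frames that arrive after their playout time are dropped in this sketch.
    """
    slots: dict[float, dict[str, Frame]] = defaultdict(dict)
    for f in frames:
        playout = round(f.capture_ts + latency, 6)  # round to microseconds for tidy slot keys
        if f.arrival_ts <= playout:
            slots[playout][f.camera] = f
    return dict(slots)


if __name__ == "__main__":
    frames = [
        Frame("cam1", 10.00, 10.12),  # ~0.12 s transit
        Frame("cam2", 10.00, 10.45),  # ~0.45 s transit
        Frame("cam1", 10.04, 10.16),
        Frame("cam2", 10.04, 10.49),
    ]
    latency = common_latency(frames)
    for t, cams in sorted(playout_schedule(frames, latency).items()):
        print(f"play at {t:.2f}s: {sorted(cams)}")
```

Because each frame is scheduled at its capture time plus the same latency, the two cameras' frames captured at 10.00 s land in the same playout slot even though one path is roughly 0.33 s slower than the other. The trade-off in this design is that every feed inherits the delay of the slowest path plus the safety margin.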

The Benefits of Multi-Camera Synchronization

The benefits of multi-camera synchronization are clear for broadcasters. It is a low-cost, flexible way to manage live remote production from a central production facility. You have access to multiple live audio and video feeds, secure in the knowledge that they are in sync, without a prohibitive drain on resources. Because the feeds travel over IP networks rather than depending on OB vans, satellite links, or private managed networks, each event needs fewer resources, so broadcasters can produce more high-quality content than ever before.

These benefits extend beyond broadcasting. Enterprises, nonprofits, and government agencies can now deliver broadcast-quality live event coverage with fewer resources, helping viewers engage more closely with their content.