Editor’s note: This is the third article in a series on remote production by guest author Adrian Pennington.

So, you’ve been tasked with streaming a live music concert for an OTT channel, but the budget you’ve got to play with isn’t huge. Well, you won’t be alone. Demand for live event streaming is heating up because audiences want it. And though the technology is here to make it happen, monetization still takes time to build.

Naturally you want the best coverage and quality of experience for your audience and you could really do without any hiccups.

To manage costs, it makes sense to send as few crew members as possible to the venue. Ideally, you’d use a lightweight video capture kit of a couple of cameras and encoders, and transport all the feeds back to your production facilities over the internet for mixing and playout.

But you’re concerned that your internet connection may not be up to the task. And you’re right to ask. No one should embark on remote production (REMI) without checking that the video equipment and infrastructure are going to work.

The luxury of dedicated dark fiber networks comes at a high cost or is simply not available at many event locations and regions. Running remote productions over the internet is the most cost-effective approach. However, bandwidth availability and performance are difficult to predict and can change at any moment.

Here’s a checklist to consider when preparing your live stream:

Geographical challenges

If you’re sending streams from one location to another over a relatively short distance, performance is going to be a lot more stable and predictable than when routing over a longer distance. That goes double if you’re in one country sending content to a production facility in another: it can introduce significant delays due to the many processing steps and multiple buffers along the signal path. Your stream also has to contend with all the other traffic en route.

Using a local cloud PoP (point of presence) or a CDN (content delivery network) can reduce latency and improve performance. But if you’ve travelled to another country and have not changed the encoder’s configuration to reflect the nearest local hop-on point, that could be an issue.

Basically, the more network hops your stream takes, the less control you have over the end product. Even streaming from an office in one city over a private WAN to another region has its risks. That’s why a streaming protocol like Secure Reliable Transport (SRT) is a good bet when streaming over distances.

What kind of bandwidth is available?

This boils down to the type of stream and the number of streams you require. An HD end product, for example, uses less bandwidth than a 4K UHD stream, for which you’ll need at least 18 Mbps. Live sports, even in HD, probably need about 10 Mbps, a figure that rises if you need frame rates higher than 60 Hz, and rises further still if you need more than one feed.
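As a rough illustration of that arithmetic, here is a minimal Python sketch that multiplies a per-feed bitrate by the number of feeds and adds a safety margin. The function name and the 25% headroom are assumptions made for the example, not recommendations from any vendor; only the 10 Mbps and 18 Mbps figures come from the text above.

```python
def required_uplink_mbps(stream_mbps, feeds=1, headroom=0.25):
    """Estimate the uplink needed to carry `feeds` streams of `stream_mbps` each.

    `headroom` is an assumed safety margin (25% by default) to leave room
    for retransmissions and other traffic; tune it for your own link.
    """
    if stream_mbps <= 0 or feeds < 1 or headroom < 0:
        raise ValueError("stream_mbps must be > 0, feeds >= 1, headroom >= 0")
    return stream_mbps * feeds * (1 + headroom)


# Three HD sports cameras at about 10 Mbps each.
print(required_uplink_mbps(10, feeds=3))  # 37.5

# A single 4K UHD feed at 18 Mbps.
print(required_uplink_mbps(18))  # 22.5
```

If the figure this returns is higher than the uplink you actually measure at the venue, that is the cue to drop a feed, lower the bitrate or change codec before show day.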

Which IP streaming protocol should I use?

The main internet protocols used for broadcast contribution are RTMP, which is not a secure stream, and UDP, which will not give you reliability. Your best solution is the open source SRT video streaming protocol. In an SRT configuration, the sender and receiver modules can detect network performance with regard to latency, jitter and available bandwidth. SRT integrations can use this information to guide stream initiation, or even to adapt the endpoints to changing network conditions.
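One way such measurements might guide stream initiation is in choosing the latency (receive buffer) setting from the measured round-trip time. The sketch below is a hypothetical helper, not part of any SRT library. The multiplier of 4 is an assumed rule-of-thumb starting point and the 120 ms floor is assumed to match SRT's usual default, so treat both as values to tune against your own link.

```python
def suggest_srt_latency_ms(rtt_ms, rtt_multiplier=4.0, floor_ms=120):
    """Suggest an SRT latency setting from a measured round-trip time.

    Assumptions (not from the article): latency is sized as a multiple of
    RTT so lost packets can be re-requested and resent in time, and the
    result is never allowed to drop below `floor_ms`.
    """
    if rtt_ms < 0 or rtt_multiplier <= 0:
        raise ValueError("rtt_ms must be >= 0 and rtt_multiplier > 0")
    return max(floor_ms, round(rtt_ms * rtt_multiplier))


# A nearby facility with a 20 ms round trip stays at the floor.
print(suggest_srt_latency_ms(20))   # 120

# An international link with a 60 ms round trip needs a larger buffer.
print(suggest_srt_latency_ms(60))   # 240
```

A lossier link generally calls for a larger multiplier, at the cost of more delay.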

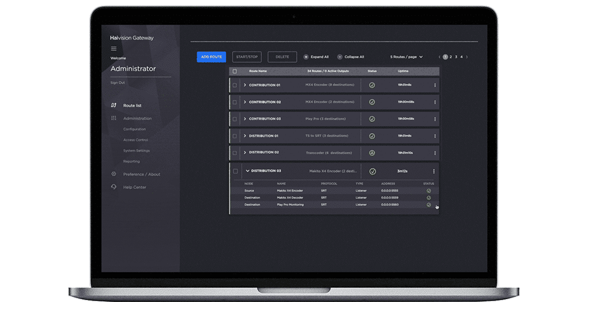

Transiting signals through a central hub increases end-to-end signal transit times and potentially doubles bandwidth costs, because two links are required: one from the source to the central hub and another from the hub to the destination. By using direct source-to-destination connections, SRT can reduce delay, remove the central bottleneck, and lower network costs.

I’m still not convinced, won’t there still be a delay?

Latency bedevils a lot of live-streamed production and is often the cause of outrage among frustrated viewers on social media.

Live-streamed experiences need really low end-to-end latency to be viable. Using SRT guarantees that the packets that enter the network are identical to the ones that are received at the decoder, dramatically simplifying the whole process. SRT is designed for low latency video stream delivery over dirty networks.

It’s a common misconception, though, that lag is caused by internet carriage alone. It’s just as likely to be the video processing that takes place at either end of the network in the encoder and the decoder.

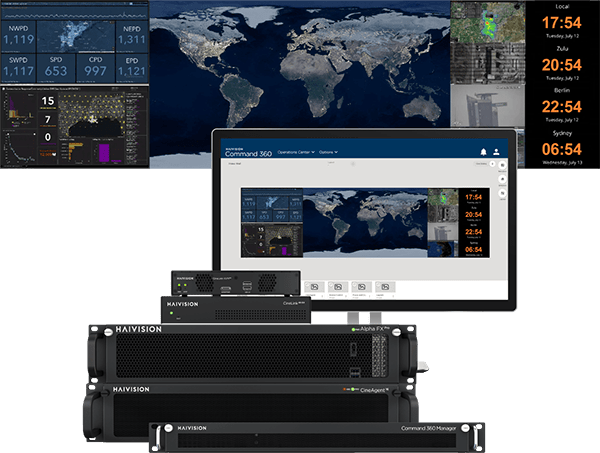

The solution is to ensure that the encoder is low latency and capable of addressing these issues, as is the case with Haivision’s Makito video encoder series.

What if the video and audio streams are out of sync?

Good question. If the video is not synchronized, switching between cameras can result in issues such as input lag and lip sync delay – disastrous for live streaming. With live video, timing is everything. This is why Haivision has a feature called Stream Sync, which ensures that live feeds are synchronized to within one frame. This means that downstream production equipment will not experience issues when switching between video and audio sources. For live production, this also means that any camera can be used with any audio track, with no noticeable video glitches or loss of lip-sync.

What other network issues can I expect?

Packet loss and delay variation (jitter) in networks can cause a variety of problems, from drop-outs and glitches to downtime. A managed IP network (MPLS or WAN) should have relatively low levels of jitter. However, this can vary greatly when using the public internet. Jitter will depend on the location of the connection and its geographical reach, as well as bandwidth. In general, the more bandwidth, the more room to compensate for jitter.

You don’t want any of this to get in the way of your audience’s enjoyment. Therefore, it’s important that the encoder used can compensate for jitter and recover lost packets. The SRT low latency video streaming protocol is designed to greatly reduce jitter and packet loss without impacting latency.
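If you want a number for how jittery a link is before you rely on it, one common approach is the running interarrival jitter estimate defined for RTP in RFC 3550. The sketch below applies that estimator to lists of send and receive timestamps. It is an illustration of measuring jitter, not a description of how SRT works internally, and the function name and sample timestamps are made up for the example.

```python
def interarrival_jitter(send_times_ms, recv_times_ms):
    """Running jitter estimate in the style of RFC 3550, section 6.4.1.

    For each consecutive pair of packets, D is the change in transit time:
    (receive spacing) - (send spacing). The estimate moves 1/16 of the way
    towards |D| on every packet, which smooths out single spikes.
    """
    if len(send_times_ms) != len(recv_times_ms):
        raise ValueError("send and receive lists must be the same length")
    jitter = 0.0
    for i in range(1, len(send_times_ms)):
        d = (recv_times_ms[i] - recv_times_ms[i - 1]) - (
            send_times_ms[i] - send_times_ms[i - 1]
        )
        jitter += (abs(d) - jitter) / 16.0
    return jitter


# Packets sent every 20 ms; the third one arrives 10 ms late.
sent = [0, 20, 40]
received = [5, 25, 55]
print(interarrival_jitter(sent, received))  # 0.625
```

A steady link keeps this value close to zero, while a congested public internet path will push it up and signals that you need a bigger receive buffer.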

Will I be able to stream both ways in real-time?

Return feeds are useful for a whole lot of reasons, enabling your crew at the venue to view and collaborate with resources from production HQ in real time. Real-time confidence monitoring is one typical example. Another might be to show a return teleprompter feed to roving presenters speaking to the camera, or to use bidirectional video for remote interviews.

If this is a requirement then you need to consider both upstream and downstream bandwidth. Perhaps the network you are using is different for one or the other.

Either way, return feeds will double the amount of latency, so low latency is essential if the round trip is to stay close to real time. High-performance encoders specifically designed for low latency and return feeds, such as the Makito X video encoder series, can round trip video feeds in under 200 milliseconds (under optimal network conditions).
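To see how quickly a round trip adds up, it can help to write down a latency budget for each direction. The Python sketch below sums encode, network and decode delay per leg and compares the total with the 200 millisecond figure mentioned above. The class name and every per-stage number are hypothetical placeholders, so substitute measurements from your own equipment and link.

```python
from dataclasses import dataclass


@dataclass
class Leg:
    """One direction of the link; all values are in milliseconds."""
    encode_ms: float
    network_ms: float  # transit time plus any receive buffering
    decode_ms: float

    def total_ms(self):
        return self.encode_ms + self.network_ms + self.decode_ms


def round_trip_ms(outbound, inbound):
    """Round-trip latency is the sum of the contribution and return legs."""
    return outbound.total_ms() + inbound.total_ms()


# Hypothetical figures for illustration only.
to_studio = Leg(encode_ms=40, network_ms=30, decode_ms=25)
to_venue = Leg(encode_ms=40, network_ms=30, decode_ms=25)

total = round_trip_ms(to_studio, to_venue)
print(total, "ms round trip; within 200 ms budget:", total <= 200)
```

Laying the budget out this way also makes it obvious when the encoder and decoder, rather than the network, are eating most of the delay.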

How do I know the stream is secure?

When relying on the internet, you need to keep your content safe from piracy. Proprietary digital rights management systems are suitable for end-viewer delivery, but not for live video contribution. AES is a widely accepted encryption standard used by both governments and broadcasters for sensitive content. AES can encrypt video streams with keys of up to 256 bits to ensure that they are not accessible to unauthorized users. SRT is an open source protocol pioneered by Haivision that natively supports AES encryption. Using encoders that support SRT will ensure that your streams are delivered across an IP network securely.
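In practice, encryption is usually switched on by giving both ends the same passphrase and key length. The sketch below builds an `srt://` URL with `mode`, `passphrase` and `pbkeylen` query parameters, following the convention used by common SRT tools as far as I am aware. The helper name, host and passphrase are hypothetical, and the exact parameter names and limits should be checked against the documentation for your own encoder or tool.

```python
from urllib.parse import urlencode

# Key lengths in bytes, corresponding to AES-128, AES-192 and AES-256.
VALID_KEY_BYTES = {16, 24, 32}


def build_srt_url(host, port, passphrase, key_bits=256, mode="caller"):
    """Build an SRT URL with encryption parameters (illustrative helper).

    Assumptions: the receiving tool accepts `passphrase` and `pbkeylen`
    (key length in bytes) as query parameters, and passphrases must be
    between 10 and 79 characters long.
    """
    if key_bits % 8 != 0 or key_bits // 8 not in VALID_KEY_BYTES:
        raise ValueError("key_bits must be 128, 192 or 256")
    if not 10 <= len(passphrase) <= 79:
        raise ValueError("passphrase must be 10 to 79 characters long")
    query = urlencode(
        {"mode": mode, "passphrase": passphrase, "pbkeylen": key_bits // 8}
    )
    return f"srt://{host}:{port}?{query}"


# Hypothetical destination and passphrase.
print(build_srt_url("ingest.example.com", 9000, "correct-horse-battery"))
```

Keep the passphrase out of shared documents and scripts; anyone who has it and can reach the stream can decrypt it.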

How about contributing video to the cloud?

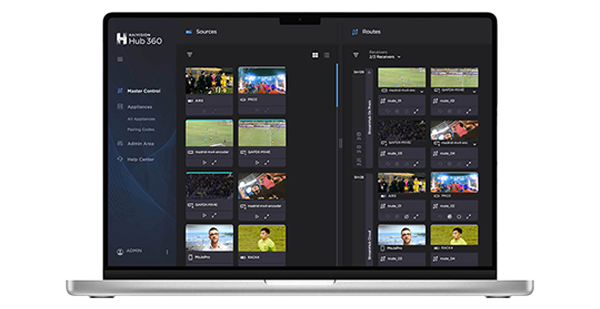

There are cases where you might want to send contribution feeds to a cloud-based video service or platform, and this will increasingly be the case in future workflows. You would have to make sure, though, that all the key production tools you would normally use, from switchers to graphics and logo inserters and compliance systems, can interoperate with each other in a virtual workflow.

It’s also important to consider how much bandwidth is available at the venue and the physical distance to the nearest PoP or datacenter. You should also ensure that the cloud service can accept a secure and reliable streaming protocol.

Conclusion

We’ve covered a lot of ground, so let’s recap.

Many things can vary when using the internet for remote production, so it’s important to be able to adapt encoding to conditions in real time and to have error correction tools (like the SRT protocol, for example) to handle any surprise glitches.

You may get to the remote site having been promised a 100 Mbps link, only to find it’s nowhere near as good. So you adapt. With an encoder that is flexible enough to handle HEVC and H.264 and to adapt to the internet conditions at hand, you can still get a high-quality stream out of the venue.

At the same time, for a live event, and certainly for two-way interviews, you need ultra-low-latency streaming, which is where solutions like the Haivision Makito series of encoders and decoders, and SRT, come into their own.

Recognize that the internet is inherently less secure than a managed network. If security is essential, select protocols and a cloud provider that can handle encryption before you plug your kit into the local Ethernet.